EVENT & MESSAGING SERVICE

Event Grid vs EventHubs vs Service Bus

| Service | Purpose | Type | When to use |
|---|---|---|---|
| Event Grid | Reactive programming | Event distribution (discrete) | React to status changes |
| EventHubs | Big data pipeline | Event streaming (series) | Telemetry and distributed data streaming |
| Service Bus | High-value enterprise messaging | Message | Order processing and financial transactions |

MESSAGES

Azure supports two types of queue mechanisms:

- Service Bus queues

- Storage queues.

Service Bus Queue

Fully managed enterprise message broker with message queues and publish-subscribe topics.

- Supports: .NET, Java, JavaScript, and Go SDKs, which provide a push-style API.
- Protocol: Advanced Message Queuing Protocol (AMQP) 1.0. AMQP allows integration with on-premises brokers such as ActiveMQ or RabbitMQ.
- Autodelete on idle: specify an idle interval after which a queue is automatically deleted. Minimum duration: 5 minutes.

Service Bus Premium is fully compliant with the Java/Jakarta EE Java Message Service (JMS) 2.0 API.

Message: transfer any kind of information, including: JSON, XML, Apache Avro, Plain Text.

Features

- Receive messages without polling the queue, by using a long-polling receive operation.
- Guaranteed first-in-first-out (FIFO) ordered delivery.
- Deduplication: automatic duplicate detection.
- Process messages as parallel long-running streams (messages are associated with a stream using the session ID property on the message). In this model, each node in the consuming application competes for streams, as opposed to messages. When a stream is given to a consuming node, the node can examine the state of the application stream using transactions.

- Transactional behavior and atomicity when sending or receiving multiple messages from a queue.

- Messages that can exceed 64 KB but won't likely approach the 256-KB limit.
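The duplicate detection feature above can be pictured with a small in-memory model. This is only a sketch of the idea (remember message IDs for a time window and drop repeats), not the Service Bus SDK; the class name `DedupQueue`, the 10-minute window, and the message IDs are all made up for illustration.

```python
from datetime import datetime, timedelta, timezone


class DedupQueue:
    """Toy queue that drops messages whose ID was already seen inside a time window."""

    def __init__(self, window: timedelta):
        self.window = window
        self.seen = {}       # message_id -> time the ID was first accepted
        self.messages = []   # accepted message bodies, in arrival order

    def send(self, message_id: str, body: str, now: datetime) -> bool:
        # Forget IDs that have aged out of the detection window.
        self.seen = {m: t for m, t in self.seen.items() if now - t < self.window}
        if message_id in self.seen:
            return False     # duplicate inside the window: dropped
        self.seen[message_id] = now
        self.messages.append(body)
        return True


t0 = datetime(2024, 1, 1, tzinfo=timezone.utc)
queue = DedupQueue(window=timedelta(minutes=10))
first = queue.send("order-1", "create order", t0)                          # accepted
retry = queue.send("order-1", "create order", t0 + timedelta(minutes=1))   # dropped
later = queue.send("order-1", "create order", t0 + timedelta(minutes=11))  # window passed
```

A sender that retries after a timeout can reuse the same message ID, and the second copy is discarded as long as it arrives inside the window.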

Usage

- Messaging: transfer business data between apps and services.
- Decouple applications and services: reduce coupling.

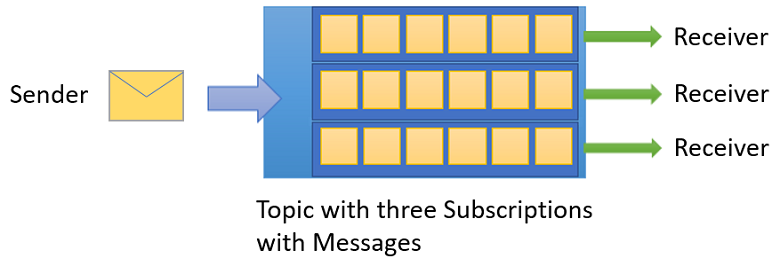

- Topics and subscriptions: 1:many relationships between publishers and subscribers.
- Message sessions: message ordering guaranteed (FIFO).

Price Tiers:

| Standard | Premium |
|---|---|
| Variable throughput | High throughput |
| Variable latency | Predictable performance |
| Pay as you go variable pricing | Fixed pricing |
| N/A | Ability to scale workload up and down |
| Message size up to 256 KB | Message size up to 100 MB |

Storage queues

Consider Storage queues when the application:

- Must store over 80 GB of messages in a queue.
- Needs to track progress for processing a message in the queue.
- Requires server-side logs of all of the transactions executed against your queues.

| Comparison Criteria | Storage queues | Service Bus queues |
|---|---|---|
| Ordering guarantee | No | Yes - First-In-First-Out (FIFO, by using message sessions) |
| Delivery guarantee | At-Least-Once | At-Least-Once (using PeekLock receive mode, the default), At-Most-Once (using ReceiveAndDelete receive mode) |
| Atomic operation support | No | Yes |
| Receive behavior | Non-blocking | Blocking with or without a timeout (offers long polling, or the "Comet technique"), Non-blocking (using .NET managed API only) |
| Push-style API | No | Yes |
| Receive mode | Peek & Lease | Peek & Lock, Receive & Delete |
| Exclusive access mode | Lease-based | Lock-based |
| Lease/Lock duration | 30 seconds (default), 7 days (maximum) | 30 seconds (default) |
| Lease/Lock precision | Message level | Queue level |
| Batched receive | Yes | Yes |
| Batched send | No | Yes |
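The two Service Bus receive modes in the table differ in when the message is removed. The following is a toy in-memory model of that difference, not the real client library; `ToyQueue` and its method names are invented for this sketch, and lock expiry is reduced to an explicit `abandon` call.

```python
from collections import deque


class ToyQueue:
    def __init__(self, messages):
        self.ready = deque(messages)
        self.locked = {}          # lock token -> message currently being processed
        self._next_token = 0

    def receive_and_delete(self):
        # At-most-once: the message is gone as soon as it is handed out.
        return self.ready.popleft() if self.ready else None

    def peek_lock(self):
        # At-least-once: the message is hidden until completed or abandoned.
        if not self.ready:
            return None
        self._next_token += 1
        self.locked[self._next_token] = self.ready.popleft()
        return self._next_token, self.locked[self._next_token]

    def complete(self, token):
        del self.locked[token]    # processing succeeded: remove permanently

    def abandon(self, token):
        # Processing failed: put the message back at the front of the queue.
        self.ready.appendleft(self.locked.pop(token))


q = ToyQueue(["a", "b"])
token, msg = q.peek_lock()        # a consumer takes "a" ...
q.abandon(token)                  # ... fails, so "a" becomes available again
token, msg_again = q.peek_lock()  # "a" is delivered a second time
q.complete(token)
lost = q.receive_and_delete()     # "b" is removed even if the consumer then crashes
```

The redelivery of `"a"` is why PeekLock gives at-least-once delivery, while a crash after `receive_and_delete` would lose `"b"`, which is the at-most-once case.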

EVENTS

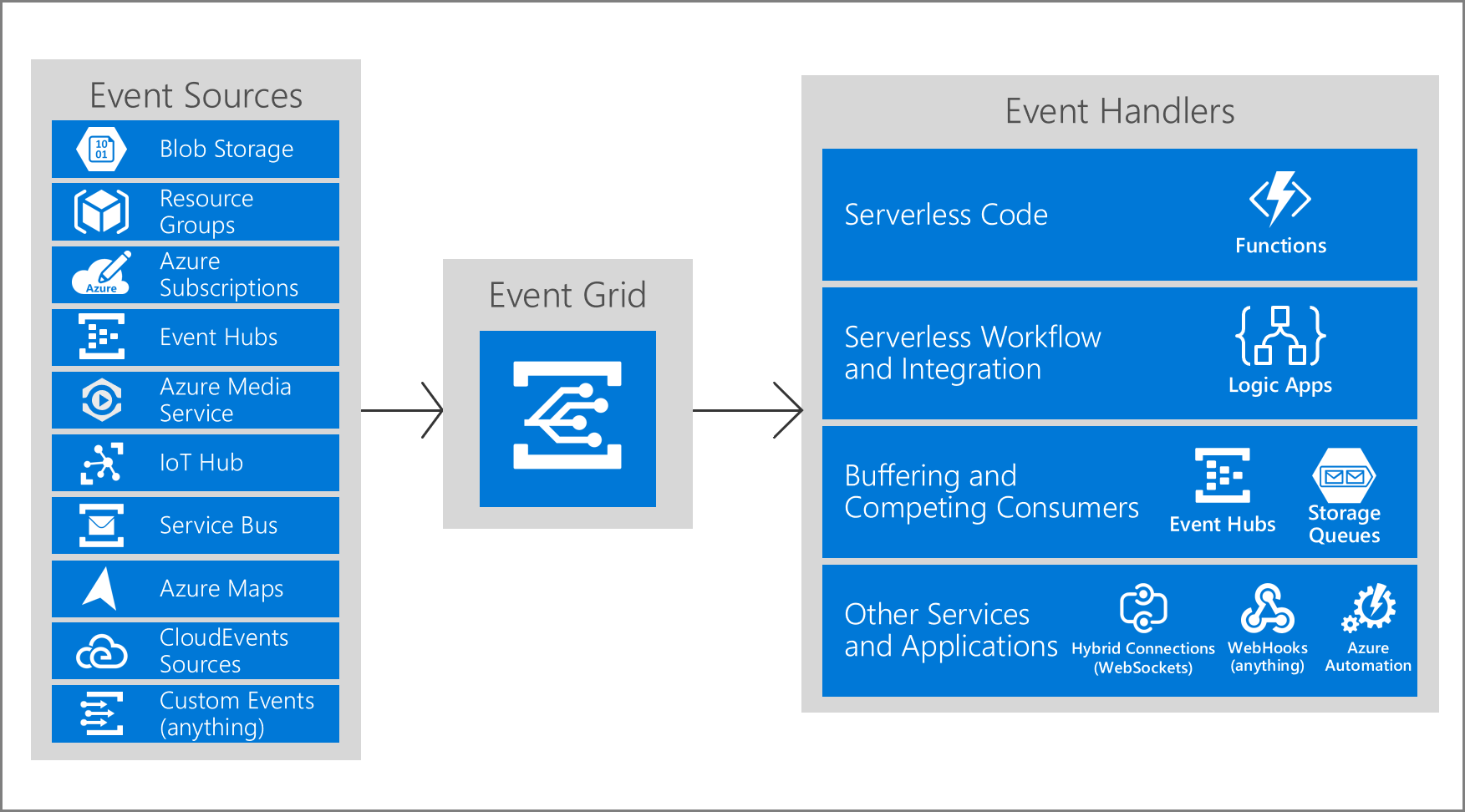

Azure EVENT GRID

A network that routes events between applications.

- Route custom events to different endpoints.

Components:

There are five concepts

1. Events

The information that happened in the system.

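- Size: up to 64 KB is covered by the General Availability (GA) Service Level Agreement (SLA).
- Preview: maximum size of 1 MB; events over 64 KB are charged in 64-KB increments.
- 413 Payload Too Large: returned when the event size exceeds the maximum limit.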

Each event contains:

Must-have fields

- id: unique ID.
- eventType: one of the registered event types for this event source.
- eventTime: timestamp.
- subject: publisher-defined path to the event subject.

Optional fields

- topic: full resource path to the event source.
- data: data specific to the resource provider.
- dataVersion: schema version.
- metadataVersion: schema version of the event metadata.

Event schema:

    [
      {
        "topic": string,
        "subject": string,
        "id": string,
        "eventType": string,
        "eventTime": string,
        "data": {
          object-unique-to-each-publisher
        },
        "dataVersion": string,
        "metadataVersion": string
      }
    ]
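As a rough illustration of the schema above, the sketch below builds one event and checks the must-have fields and the 64 KB size. It uses only the standard library; the event type `Contoso.Orders.Created`, the subject, and the helper names are hypothetical, and the size check simply measures the JSON encoding of the event.

```python
import json
import uuid
from datetime import datetime, timezone

REQUIRED_FIELDS = ("id", "eventType", "eventTime", "subject")
MAX_EVENT_BYTES = 64 * 1024  # size covered by the GA SLA, per the notes above


def make_event(event_type: str, subject: str, data: dict) -> dict:
    """Build one event with the must-have fields plus data and dataVersion."""
    return {
        "id": str(uuid.uuid4()),
        "eventType": event_type,
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "subject": subject,
        "data": data,
        "dataVersion": "1.0",
    }


def validate_event(event: dict) -> list:
    """Return a list of problems; an empty list means the event looks fine."""
    problems = [f"missing {name}" for name in REQUIRED_FIELDS if not event.get(name)]
    if len(json.dumps(event).encode("utf-8")) > MAX_EVENT_BYTES:
        problems.append("event larger than 64 KB")
    return problems


event = make_event("Contoso.Orders.Created", "/orders/42", {"total": 19.99})
payload = json.dumps([event])  # the schema above is a JSON array of events
```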

Custom Header

- You can set up to 10 custom headers.
- Each header value shouldn't be greater than 4,096 (4K) bytes.

Works with Target:

- Webhooks

- Azure Service Bus topics and queues

- Azure EventHubs

- Relay Hybrid Connections

2. Event sources

Where the event comes from.

3. Topics

It provides an endpoint where the publisher sends events.

- Collection of related events

- Publishers must authenticate with a SAS token or key before publishing to a topic.

4. Event subscriptions

Filter the events that are sent to you.

5. Event Handlers

The app or service that will process the event.

- For HTTP webhook event handlers, the event is retried until the handler returns a status code of 200 – OK.

- For Azure Storage Queue, the events are retried until the Queue service successfully processes the message push into the queue.

Access Control to events

Event Grid uses Azure role-based access control (Azure RBAC).

| Role | Description |
|---|---|
| EG Contributor | Can create and manage Event Grid resources. |
| EG Subscription Contributor | Can manage Event Grid event subscription operations. |
| EG Data Sender | Can send events to Event Grid topics. |
| EG Subscription Reader | Can read Event Grid event subscriptions. |

You must have the Microsoft.EventGrid/EventSubscriptions/Write permission on the resource that is the event source. This permissions check prevents an unauthorized user from sending events to your resource.

Webhooks

EventGrid POSTs an HTTP request to the configured endpoint with the event in the request body.

Validation

The endpoint must validate ownership before it receives events from Event Grid.

Auto-validation: Azure infrastructure automatically handles validation for

- Azure Logic Apps with Event Grid Connector

- Azure Automation via webhook

- Azure Functions with Event Grid Trigger

Validation handshake

1. Synchronous handshake:

- Supported in all Event Grid versions.

Validation Steps:

- Event Grid sends a subscription validation event to the HTTP endpoint, with a validationCode property in its data.
- The app verifies that the validation request is for an expected event subscription, and synchronously returns the validationCode in the response.
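The synchronous steps above could look roughly like this inside a webhook handler. The sketch is framework-agnostic: `handle_webhook` is a hypothetical function that receives the already-parsed JSON array and returns a status code and a response body. It relies on the validation event type being `Microsoft.EventGrid.SubscriptionValidationEvent` and on the response property being `validationResponse`; the check that the request is for an expected subscription is left out for brevity.

```python
VALIDATION_EVENT_TYPE = "Microsoft.EventGrid.SubscriptionValidationEvent"


def handle_webhook(events: list) -> tuple:
    """Return (status_code, response_body) for a batch of posted events."""
    for event in events:
        if event.get("eventType") == VALIDATION_EVENT_TYPE:
            code = event["data"]["validationCode"]
            # Echo the code back to prove ownership of the endpoint.
            return 200, {"validationResponse": code}
    # Ordinary events would be processed here before acknowledging.
    return 200, {}


status, body = handle_webhook([
    {
        "eventType": VALIDATION_EVENT_TYPE,
        "data": {"validationCode": "512d38b6-c7b8-40c8-89fe-f46f9e9622b6"},
    }
])
```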

2. Asynchronous handshake

- Used with third-party service (like Zapier or IFTTT) where you can't programmatically respond with the validation code.

3. Manual validation handshake

- Requires an SDK or tool that uses API version 2018-05-01-preview or later.

Steps:

- Event Grid sends a validationUrl property in the data portion of the subscription validation event to the HTTP endpoint.
- Perform a GET request to validationUrl using a REST client or your web browser.
- The provided URL is valid for 5 minutes. During that time, the provisioning state of the event subscription is AwaitingManualAction; after that, it becomes Failed.

Event Filter

By default, all event types for the event source are sent to the endpoint. We can filter events based on

1. Event types

Provide an array with the event types, or specify All to get all event types for the event source.

The JSON syntax for filtering by event type is:

"filter": {

"includedEventTypes": [

"Microsoft.Resources.ResourceWriteFailure",

"Microsoft.Resources.ResourceWriteSuccess"

]

}

2. Subject filtering

Specify a starting or ending value for the subject

"filter": {

"subjectBeginsWith": "/blobServices/default/containers/mycontainer/log",

"subjectEndsWith": ".jpg"

}
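To make the event type and subject filters concrete, here is a minimal sketch that evaluates them against an event. `matches` is a hypothetical helper, not an Event Grid API; it compares subjects case-sensitively for simplicity and treats a missing filter property as "match everything".

```python
def matches(event: dict, flt: dict) -> bool:
    """Return True when the event passes the event type and subject filters."""
    included = flt.get("includedEventTypes")
    if included and "All" not in included and event["eventType"] not in included:
        return False
    subject = event["subject"]
    if not subject.startswith(flt.get("subjectBeginsWith", "")):
        return False
    if not subject.endswith(flt.get("subjectEndsWith", "")):
        return False
    return True


flt = {
    "includedEventTypes": ["Microsoft.Storage.BlobCreated"],
    "subjectBeginsWith": "/blobServices/default/containers/mycontainer/log",
    "subjectEndsWith": ".jpg",
}
jpg_event = {
    "eventType": "Microsoft.Storage.BlobCreated",
    "subject": "/blobServices/default/containers/mycontainer/log/photo.jpg",
}
png_event = dict(jpg_event, subject=jpg_event["subject"].replace(".jpg", ".png"))
```

Here `matches(jpg_event, flt)` is true, while `png_event` is rejected by the `subjectEndsWith` check.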

3. Advanced fields and operators

Filter by values in the data fields and specify the comparison operator

An advanced filter specifies:

- operatorType: the type of comparison.
- key: the field in the event data that you're using for filtering. It can be a number, boolean, or string.
- value or values: the value or values to compare to the key.

The JSON syntax for an advanced filter is:

    "filter": {
      "advancedFilters": [
        {
          "operatorType": "NumberGreaterThanOrEquals",
          "key": "Data.Key1",
          "value": 5
        },
        {
          "operatorType": "StringContains",
          "key": "Subject",
          "values": ["container1", "container2"]
        }
      ]
    }

Output batching

Batch events for delivery for improved HTTP performance in high-throughput scenarios.

- Turned off by default and can be turned on per subscription via the portal, CLI, PowerShell, or SDKs.
- The batch size may be smaller if more events aren't available at the time of publish. Event Grid doesn't delay events to create a batch if fewer events are available.
- A batch can be larger than the preferred batch size if a single event is larger than the preferred size.

Batched delivery has two settings:

1. Max events per batch

The maximum number of events Event Grid will deliver per batch.

- Must be between 1 and 5,000.
- A batch never exceeds this number of events.

2. Preferred batch size in kilobytes

Target ceiling for batch size in kilobytes.

- If a single event is larger than the preferred batch size, it is delivered in its own batch.
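How the two settings interact can be sketched with a small grouping function. This is one plausible reading of the rules above, not Event Grid's actual algorithm: a batch is closed when it already holds the maximum number of events or when adding the next event would push it past the preferred size, and an oversized event ends up alone. The function name and the sample sizes are made up.

```python
def make_batches(event_sizes_kb, max_events, preferred_kb):
    """Group event sizes (in KB) into batches, keeping arrival order."""
    batches, current, current_kb = [], [], 0
    for size in event_sizes_kb:
        too_many = len(current) >= max_events
        too_big = bool(current) and current_kb + size > preferred_kb
        if too_many or too_big:
            batches.append(current)      # close the current batch
            current, current_kb = [], 0
        current.append(size)
        current_kb += size
    if current:
        batches.append(current)          # flush whatever is left, no waiting
    return batches


batches = make_batches([10, 20, 100, 5, 5, 5], max_events=2, preferred_kb=64)
# batches == [[10, 20], [100], [5, 5], [5]]
```

The 100 KB event exceeds the 64 KB preference and is sent by itself, and the final batch holds a single event because nothing else was available.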

Retries Strategy

Durable delivery: Event Grid tries to deliver each event at least once for each matching subscription immediately. If delivery fails, it retries.

Retry policy

Customize the retry policy when creating an event subscription by using the following two settings. An event is dropped if either limit of the retry policy is reached:

- Max number of attempts: integer between 1 and 30. Default: 30.
- Event time-to-live (TTL): integer between 1 and 1440 minutes. Default: 1440 minutes.

Dead Letter Queue (DLQ): a storage account that holds events that failed to be delivered, for further analysis.

- Event Grid drops the event if dead-lettering isn't configured.
- To enable it, specify a storage account to hold undelivered events when creating the event subscription.
- If the DLQ is unavailable for 4 hours, the event is dropped.

Event Grid sends an event to the DLQ when either condition is met:

- The event isn't delivered within the TTL period.
- The number of retries exceeds the limit.
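The retry limits and the dead-letter rule can be combined into one small decision function. This is a sketch under the defaults listed above (30 attempts, 1440 minutes); `next_action` and its return strings are hypothetical names, and whether "at the limit" counts as reached is an assumption of this sketch.

```python
from datetime import datetime, timedelta, timezone


def next_action(attempts, first_attempt, now,
                max_attempts=30, ttl_minutes=1440, dead_letter_configured=False):
    """Decide what happens to an event after a failed delivery attempt."""
    expired = now - first_attempt >= timedelta(minutes=ttl_minutes)
    exhausted = attempts >= max_attempts
    if not (expired or exhausted):
        return "retry"
    # Either limit was reached: dead-letter if configured, otherwise drop.
    return "dead-letter" if dead_letter_configured else "drop"


t0 = datetime(2024, 1, 1, tzinfo=timezone.utc)
early = next_action(3, t0, t0 + timedelta(minutes=5))
out_of_attempts = next_action(30, t0, t0 + timedelta(minutes=5))
out_of_time = next_action(3, t0, t0 + timedelta(minutes=1440), dead_letter_configured=True)
```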

ERROR HANDLING

Exponential backoff: if an endpoint experiences delivery failures, Event Grid begins to delay the delivery and retry of events to that endpoint.

- If the error is in the list below, no retry happens.

| Endpoint Type | Error codes |
|---|---|
| Azure Resources | 400 Bad Request, 413 Request Entity Too Large, 403 Forbidden |
| Webhook | 400 Bad Request, 413 Request Entity Too Large, 403 Forbidden, 404 Not Found, 401 Unauthorized |

- If the error is not in the list above, Event Grid waits 30 seconds for a response and then performs an exponential backoff retry.
- If the endpoint responds within 3 minutes, Event Grid attempts to remove the event from the retry queue on a best-effort basis.
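The error table above translates directly into a lookup. The sketch below encodes it, together with a generic capped exponential backoff; the base delay and cap are arbitrary illustration values, not Event Grid's real retry schedule, and the names `NON_RETRYABLE`, `should_retry`, and `backoff_delays` are invented here.

```python
# Error codes that are not retried, keyed by endpoint type (from the table above).
NON_RETRYABLE = {
    "azure_resource": {400, 403, 413},
    "webhook": {400, 401, 403, 404, 413},
}


def should_retry(endpoint_type: str, error_code: int) -> bool:
    """Return True when a failed delivery with this error code is retried."""
    return error_code not in NON_RETRYABLE[endpoint_type]


def backoff_delays(base_seconds: int, attempts: int, cap_seconds: int) -> list:
    """Generic capped exponential backoff: base, 2*base, 4*base, ... up to the cap."""
    return [min(base_seconds * 2 ** n, cap_seconds) for n in range(attempts)]


delays = backoff_delays(base_seconds=10, attempts=5, cap_seconds=120)
# delays == [10, 20, 40, 80, 120]
```

Note the asymmetry the table implies: a 404 from a webhook is final, while the same code from an Azure resource endpoint is retried.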

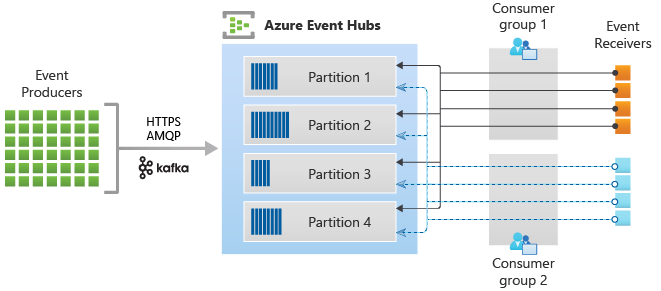

Azure EventHub

A "front door" for an event pipeline, often called an event ingestor in solution architectures.

Event ingestor: a component or service that sits between event publishers and event consumers to decouple the production of an event stream from the consumption of those events.

Features:

- Fully managed Platform-as-a-Service (PaaS): for example, EventHubs for Apache Kafka gives you a managed Kafka experience without running your own Kafka cluster.
- Enables you to receive and process millions of events per second.
- Real-time and batch processing: EventHubs uses a partitioned consumer model, enabling multiple applications to process the stream concurrently and letting you control the speed of processing.
- Scalable: scaling options, like Auto-inflate, scale the number of throughput units to meet your usage needs.

Usage:

- Unified streaming platform with time retention buffer, decoupling event producers from event consumers.

- Big data streaming and Event ingestion service.

Key Concept

EventHub Client:

The primary interface for developers interacting with the EventHubs client library.

EventHub producer

Client that serves as a source of telemetry data, diagnostics information, usage logs, or other log data

- Example: an IoT device, an app, or a website.

EventHub consumer

Client which reads information from the EventHub and allows processing of it.

- Example: Azure Stream Analytics, Apache Spark, or Apache Storm.

Processing: aggregation, complex computation and filtering, distribution or storage of the information in a raw or transformed fashion.

Partition

Partitions are a means of data organization associated with the parallelism required by event consumers.

- An ordered sequence of events held in an EventHub. New events are added to the end of this sequence.
- Number of partitions:
  - Must be between 1 and the maximum partition count allowed for each pricing tier.
  - Specified when the EventHub is created and cannot be changed.

Partitioned consumer pattern: each consumer only reads a specific subset, or partition, of the message stream.
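One way to picture the partitioned consumer pattern is to hand each partition to exactly one consumer. The round-robin assignment below is only an illustration of "each consumer reads a subset"; it is not how any EventHubs client balances load, and the worker names are made up.

```python
def assign_partitions(partition_ids, consumers):
    """Give every partition to exactly one consumer, round-robin."""
    assignment = {consumer: [] for consumer in consumers}
    for index, partition_id in enumerate(partition_ids):
        owner = consumers[index % len(consumers)]
        assignment[owner].append(partition_id)
    return assignment


assignment = assign_partitions(["0", "1", "2", "3"], ["worker-a", "worker-b"])
# assignment == {"worker-a": ["0", "2"], "worker-b": ["1", "3"]}
```

Because no partition has two owners, the workers process the stream in parallel without seeing each other's events.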

Consumer group

A view of an entire EventHub. Consumer groups enable multiple consuming applications to each have a separate view of the event stream, and to read the stream independently at their own pace and from their own position.

- There can be at most 5 concurrent readers per partition per consumer group; the recommendation is 1 concurrent reader per partition per consumer group.
- Each active reader receives all of the events from its partition; if there are multiple readers on the same partition, they receive duplicate events.
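The "separate view, own pace, own position" idea can be modelled with one shared event log and one read position per consumer group. This toy keeps the positions inside the partition object purely for brevity; it is a simplification for illustration, and `ToyPartition` and the group names are hypothetical.

```python
class ToyPartition:
    def __init__(self):
        self.events = []     # append-only sequence of events
        self.offsets = {}    # consumer group -> index of the next event to read

    def append(self, event):
        self.events.append(event)

    def read(self, group, max_count=1):
        """Return the next events for this group and advance only its position."""
        start = self.offsets.get(group, 0)
        batch = self.events[start:start + max_count]
        self.offsets[group] = start + len(batch)
        return batch


partition = ToyPartition()
for name in ("e1", "e2", "e3"):
    partition.append(name)

fast = partition.read("analytics", max_count=2)   # ["e1", "e2"]
slow = partition.read("archiver", max_count=1)    # ["e1"], unaffected by analytics
rest = partition.read("analytics", max_count=2)   # ["e3"]
```

Reading never removes events from the log, which is why each group sees the whole stream regardless of what the others have consumed.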

Event receivers

Any entity that reads event data from an EventHub.

- The EventHubs service delivers events through a session as they become available.

- All EventHubs consumers connect via an AMQP 1.0 session.
- All Kafka consumers connect via the Kafka protocol 1.0 and later.

Throughput units or processing units:

Pre-purchased units of capacity that control the throughput capacity of EventHubs.

- 1 TU = 1 MB/sec or 1k events/sec of ingress, with 2x egress.
- Standard EventHubs: 1-20 throughput units. More can be added through a quota increase request.

- Usage beyond your purchased throughput units is throttled.
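A back-of-the-envelope sizing based on the figures above: one throughput unit covers 1 MB/sec or 1,000 events/sec of ingress, and egress is taken as 2 MB/sec per unit (the "2x" from the notes). The function name and the sample workloads are made up, and event-count limits on egress are ignored in this sketch.

```python
import math


def throughput_units_needed(ingress_mb_per_sec, ingress_events_per_sec, egress_mb_per_sec):
    """Smallest number of TUs that satisfies every limit at once."""
    by_ingress_mb = math.ceil(ingress_mb_per_sec / 1.0)
    by_ingress_events = math.ceil(ingress_events_per_sec / 1000)
    by_egress_mb = math.ceil(egress_mb_per_sec / 2.0)
    return max(1, by_ingress_mb, by_ingress_events, by_egress_mb)


bandwidth_bound = throughput_units_needed(3.5, 2500, 6)    # 4: limited by ingress MB/sec
event_bound = throughput_units_needed(0.2, 4200, 1)        # 5: limited by events/sec
```

Many small events can require more units than the raw bandwidth suggests, as the second example shows.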

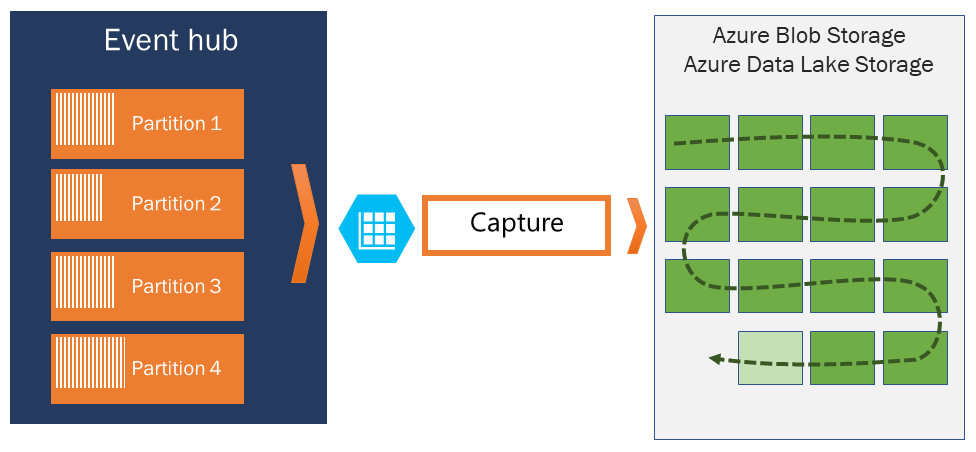

EventHub Capture

Automatically capture the streaming data in EventHubs in an Azure Blob storage or Azure Data Lake Storage (Gen1/Gen2) account of your choice, with the added flexibility of specifying a time or size interval.

- Scales automatically with EventHubs throughput units in the standard tier or processing units in the premium tier.
- Captured data is written in Apache Avro format: a compact, fast, binary format that provides rich data structures with inline schema.
- Each partition captures independently and writes a completed block blob at the time of capture.
- The storage account used for capture can be in the same region as your EventHub or in another region.
- The capture window is a minimum size and time configuration with a "first wins" policy: whichever limit is reached first triggers a capture.
- Stored data naming:

{Namespace}/{EventHub}/{PartitionId}/{Year}/{Month}/{Day}/{Hour}/{Minute}/{Second}
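The naming convention can be reproduced with a format string, which is handy when you need to locate captured blobs for a given time. This is a sketch that assumes the date and time parts are zero-padded; the helper name and the sample namespace, hub, and timestamp are illustrative only.

```python
from datetime import datetime, timezone


def capture_blob_path(namespace, event_hub, partition_id, captured_at):
    """Build {Namespace}/{EventHub}/{PartitionId}/{Year}/{Month}/{Day}/{Hour}/{Minute}/{Second}."""
    return (
        f"{namespace}/{event_hub}/{partition_id}/"
        f"{captured_at:%Y/%m/%d/%H/%M/%S}"
    )


path = capture_blob_path(
    "mynamespace", "myeventhub", "0",
    datetime(2017, 12, 8, 3, 3, 17, tzinfo=timezone.utc),
)
# path == "mynamespace/myeventhub/0/2017/12/08/03/03/17"
```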

Security

Supports both AAD and shared access signatures (SAS) to handle both authentication and authorization.

Built-in roles:

| Role | Access |
|---|---|
| Azure EventHubs Data Owner | Complete access to EventHubs resources. |
| Azure EventHubs Data Sender | Send access to EventHubs resources. |
| Azure EventHubs Data Receiver | Receiving access to EventHubs resources. |